Image Classification Principles

Goal: classify scene objects or estimate object model parameters.

Scene: The scene encompasses the physical surface under study. In remote sensing, it consists of the actual physical entities or objects, together with their surroundings, that the sensor observes. Object surfaces are sampled through the IFOV (Instantaneous Field of View) of sensor arrays. For example, in an agricultural context the object surface could contain pistachio trees, canals, roads, and grasses, along with backgrounds such as soils and rocks.

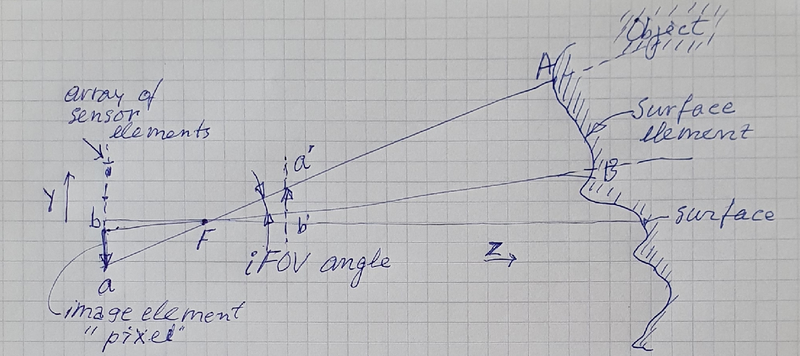

Scene objects: Scene objects are physical objects and their surroundings that exist in the real world (3D) and are the subject of study. The surfaces of these objects are sampled through the IFOV of sensor arrays. The intersection of the "solid" IFOV cone with an object surface is projected onto a sensor element, and the photon counts from this intersection are represented as image elements or "pixels".

Figure 1 illustrates how an imaging system captures photons from a specific area of an object. The "IFOV angle" (Instantaneous Field of View) is the narrow cone of view seen by a single sensor element, which corresponds to a single "pixel" or "image element". When this cone intersects a physical "object surface", the photons coming from the intersected area (the "Ground Resolution Cell", GRC) are directed to and registered by that single sensor element.

Figure 1. This diagram illustrates the intersection of the "solid" IFOV cone with an object surface, which is projected onto one sensor element of a sensor array (AB → b,a). The area AB, which is the sampled area of the object surface, is also called the Ground Resolution Cell (GRC). Photon counts on ab are represented as image elements or "pixels" in the plane a’,b’. The IFOV is not a fixed constant: it depends on the position of the sensor element relative to the focal point (that is, on the element size and the focal length), which ties the optical setup directly to the captured image data.
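The geometry in Figure 1 can be made concrete with a small calculation. The sketch below assumes a nadir-looking sensor over flat terrain and the small-angle approximation: the IFOV (in radians) is roughly the detector element size divided by the focal length, and the GRC width is roughly the sensor height times the IFOV (the exact form is 2·H·tan(IFOV/2)). The function names and example numbers are hypothetical.

```python
def ifov_rad(detector_size_m: float, focal_length_m: float) -> float:
    """Approximate IFOV angle in radians (small-angle approximation: d / f)."""
    return detector_size_m / focal_length_m


def grc_width_m(height_m: float, ifov: float) -> float:
    """Approximate GRC width for a nadir view over flat terrain (H * IFOV)."""
    return height_m * ifov


# Hypothetical sensor: 10 µm detector element, 0.5 m focal length, 700 km altitude
ifov = ifov_rad(10e-6, 0.5)        # about 2e-5 rad
print(grc_width_m(700_000, ifov))  # about 14 m
```

For a fixed IFOV, the GRC grows linearly with distance, so the same sensor produces coarser ground cells from a higher orbit.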

Image elements (Pixels): Arrays of sensor data can be represented as arrays of pixels (image elements).

Spectral features: Sensor elements count photons in distinct spectral bands; these counts are recorded as Digital Numbers (DNs), which can be converted into spectral features (e.g., radiance or reflectance values).
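A minimal sketch of how an array of pixels becomes spectral features, assuming the common linear calibration radiance = gain · DN + offset applied per band. The 2 × 2 × 3 image and the gain/offset values are hypothetical; real coefficients come from the sensor's metadata, and converting radiance to reflectance needs further information (e.g., solar irradiance and geometry) not shown here.

```python
import numpy as np

# Hypothetical 2 x 2 image with 3 spectral bands, stored as (rows, cols, bands) DNs
dn = np.array([[[50, 80, 120], [55, 82, 118]],
               [[200, 180, 60], [210, 175, 65]]], dtype=np.uint16)

# Hypothetical per-band calibration coefficients
gain = np.array([0.1, 0.08, 0.05])    # radiance units per DN
offset = np.array([-1.0, -0.5, 0.0])  # radiance units

# Linear DN -> radiance conversion, broadcast over the band axis
radiance = dn.astype(np.float64) * gain + offset

# One row per pixel: each row is that pixel's spectral feature vector
features = radiance.reshape(-1, radiance.shape[2])

print(radiance[0, 0])  # approximately [4.0, 5.9, 6.0]
print(features.shape)  # (4, 3)
```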

Spatial features: Connected (adjacent) image samples form regions with spatial features (properties).
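To illustrate how adjacent samples form regions, the sketch below labels connected regions in a binary mask (for example, pixels assigned to one class) with a breadth-first flood fill and measures each region's area in pixels. The 4-connectivity rule and the function name `label_regions` are assumptions for illustration; 8-connectivity is an equally common choice, and other spatial features (perimeter, shape, texture) could be computed per region in the same pass.

```python
from collections import deque


def label_regions(mask):
    """Label 4-connected regions of truthy pixels; return label grid and areas."""
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]  # 0 means "no region"
    areas = {}
    next_label = 1
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                # Breadth-first flood fill from an unlabelled seed pixel
                queue = deque([(r, c)])
                labels[r][c] = next_label
                area = 0
                while queue:
                    y, x = queue.popleft()
                    area += 1
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = next_label
                            queue.append((ny, nx))
                areas[next_label] = area
                next_label += 1
    return labels, areas


mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, areas = label_regions(mask)
print(areas)  # {1: 3, 2: 3}
```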

Object classes: Object classes or parameters are estimated from spectral and spatial features, combined with prior knowledge (e.g., GIS data) or previous results.

Estimation is based on causal and/or statistical models requiring supervised sampling.

Training samples: Training samples are collected from training areas, also known as Regions of Interest (ROIs). ROIs are selected in homogeneous areas and can be labelled with a class label or a cluster index.
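A minimal sketch of turning ROIs into training statistics, assuming rectangular ROIs given as half-open `(row0, row1, col0, col1)` slices and an image stored as a `(rows, cols, bands)` array. The function `roi_statistics`, the class names, and the synthetic image are hypothetical. It collects the feature vectors of all pixels inside each class's ROIs and computes the class mean vector and covariance matrix.

```python
import numpy as np


def roi_statistics(image, rois):
    """Per-class mean vector, covariance matrix and sample count from ROIs.

    image: array of shape (rows, cols, bands)
    rois:  dict mapping class label -> list of (row0, row1, col0, col1)
    """
    bands = image.shape[2]
    stats = {}
    for label, rects in rois.items():
        # Stack the feature vectors of every pixel inside this class's ROIs
        samples = np.vstack([image[r0:r1, c0:c1].reshape(-1, bands)
                             for r0, r1, c0, c1 in rects])
        mean = samples.mean(axis=0)
        cov = np.cov(samples, rowvar=False)  # bands x bands
        stats[label] = (mean, cov, len(samples))
    return stats


# Synthetic 20 x 20 image with 3 bands; the top half is made brighter
rng = np.random.default_rng(0)
image = rng.normal(size=(20, 20, 3))
image[:10] += 5.0

rois = {"soil": [(0, 5, 0, 5)], "vegetation": [(12, 18, 2, 8)]}
stats = roi_statistics(image, rois)
mean, cov, n = stats["soil"]
print(n, cov.shape)  # 25 (3, 3)
```

Choosing homogeneous ROIs keeps each class's covariance small; each class also needs more training pixels than bands for its covariance matrix to be invertible.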

Clusters: Clusters are groups of nearby points in a feature space (e.g., the space spanned by spectral features).
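k-means is one widely used way to find such clusters; it is shown here as an example, not as the method the text prescribes. The sketch assumes Euclidean distance, a number of clusters `k` chosen in advance, and initial centres drawn from the data; the resulting cluster indexes are the kind of label an ROI could carry instead of a class name.

```python
import numpy as np


def _assign(features, centres):
    """Index of the nearest centre (squared Euclidean distance) for each sample."""
    d2 = ((features[:, None, :] - centres[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)


def kmeans(features, k, n_iter=20, seed=0):
    """Minimal k-means on feature vectors of shape (n_samples, n_bands)."""
    rng = np.random.default_rng(seed)
    # Initialise centres with k distinct, randomly chosen samples
    centres = features[rng.choice(len(features), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        labels = _assign(features, centres)
        # Move each centre to the mean of its members (keep it if the cluster is empty)
        for j in range(k):
            members = features[labels == j]
            if len(members):
                centres[j] = members.mean(axis=0)
    # Final assignment consistent with the final centres
    return _assign(features, centres), centres


# Hypothetical two-band feature vectors forming two well-separated groups
features = np.array([[10, 12], [11, 13], [9, 11],
                     [50, 60], [52, 58], [51, 61]], dtype=float)
labels, centres = kmeans(features, k=2)
print(labels)
```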

Parametric or nonparametric: The density of points in feature space is modelled by statistical distributions (parametric or nonparametric).
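The two families can be contrasted in a short sketch. The parametric example assumes each class follows a multivariate Gaussian (the maximum-likelihood rule, with optional class priors that could encode prior knowledge such as expected class proportions); the nonparametric example uses k-nearest-neighbour voting, which makes no assumption about the shape of the distribution. Both are common choices picked for illustration; the function names, class names, and numbers are hypothetical.

```python
import numpy as np


def gaussian_ml_classify(x, class_stats, priors=None):
    """Parametric: pick the class with the highest Gaussian log-discriminant.

    class_stats: dict label -> (mean vector, covariance matrix)
    priors:      optional dict label -> prior probability (equal if omitted)
    """
    labels = list(class_stats)
    scores = []
    for label in labels:
        mean, cov = class_stats[label]
        prior = 1.0 / len(labels) if priors is None else priors[label]
        diff = x - mean
        inv = np.linalg.inv(cov)
        _, logdet = np.linalg.slogdet(cov)
        # Squared Mahalanobis distance of every row of x to the class mean
        maha = np.einsum("ij,jk,ik->i", diff, inv, diff)
        scores.append(np.log(prior) - 0.5 * logdet - 0.5 * maha)
    return np.array(labels)[np.argmax(scores, axis=0)]


def knn_classify(x, train_x, train_y, k=3):
    """Nonparametric: majority vote among the k nearest training samples."""
    d2 = ((x[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    nearest = np.argsort(d2, axis=1)[:, :k]
    out = []
    for idx in nearest:
        values, counts = np.unique(train_y[idx], return_counts=True)
        out.append(values[np.argmax(counts)])
    return np.array(out)


# Hypothetical two-band class models and training samples
stats = {"water": (np.array([20.0, 10.0]), np.eye(2) * 4.0),
         "vegetation": (np.array([40.0, 80.0]), np.eye(2) * 25.0)}
train_x = np.array([[20, 10], [22, 12], [19, 9],
                    [40, 80], [42, 78], [39, 82]], dtype=float)
train_y = np.array(["water"] * 3 + ["vegetation"] * 3)

pixels = np.array([[21.0, 11.0], [38.0, 75.0]])
print(gaussian_ml_classify(pixels, stats))       # ['water' 'vegetation']
print(knn_classify(pixels, train_x, train_y))    # ['water' 'vegetation']
```

The parametric rule only needs each class's mean and covariance (for example, from ROI statistics), whereas the nonparametric rule keeps all training samples and compares every pixel against them.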

Image regions: Image regions have spatial properties (features) that can be used for selection.

Training sessions: In training sessions, there is interaction between spectral and spatial features.